In this article, we will discuss some of the hardware requirements necessary to run LLaMA and Llama-2 locally. The performance of a Llama-2 model depends heavily on the hardware it runs on.

The Python code relies on the following imports:

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline
from peft import LoraConfig, PeftModel
```

Llama 2 is an auto-regressive language model built on the transformer architecture. Llama 2 functions by taking a sequence of tokens as input and repeatedly predicting the next token.

The minimum hardware requirements to run the models locally are summarized below.
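To make the auto-regressive behaviour described above concrete, here is a minimal sketch of greedy decoding: predict one token, append it to the input, and repeat. The scoring function `toy_model` is a hypothetical stand-in for the transformer, and greedy selection is only one possible decoding strategy; this is an illustration, not how any particular Llama 2 runtime is implemented.

```python
from typing import Callable, List, Sequence

def greedy_generate(
    prompt: Sequence[int],
    next_token_scores: Callable[[List[int]], List[float]],
    max_new_tokens: int,
    eos_id: int,
) -> List[int]:
    """Greedy auto-regressive decoding: predict one token, append it, repeat."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)  # condition on the full prefix so far
        next_id = max(range(len(scores)), key=scores.__getitem__)  # highest-scoring token
        tokens.append(next_id)  # the prediction becomes part of the next input
        if next_id == eos_id:  # stop once the end-of-sequence token is produced
            break
    return tokens

def toy_model(tokens: List[int]) -> List[float]:
    """Hypothetical stand-in for the transformer over a 5-token vocabulary:
    it always scores (last token + 1) mod 5 highest."""
    scores = [0.0] * 5
    scores[(tokens[-1] + 1) % 5] = 1.0
    return scores

if __name__ == "__main__":
    print(greedy_generate([0], toy_model, max_new_tokens=10, eos_id=4))
```

Each step sees everything generated so far, which is why generation cost and memory grow with the length of the sequence.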

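All three currently available Llama 2 model sizes (7B, 13B, and 70B) are trained on 2 trillion tokens and have double the context length of Llama 1 (4,096 tokens instead of 2,048). Llama 2 encompasses a series of pretrained and fine-tuned generative text models.

GPUs cited as examples for running the models locally include the RTX 3060, GTX 1660, RTX 2060, AMD 5700 XT, RTX 3050, AMD 6900 XT, RTX 2060 12GB, RTX 3060 12GB, RTX 3080, and A2000.

A common question is how much RAM is needed to run Llama-2 70B with a 32K context on a CPU: 48, 56, 64, or 92 GB? The answer depends on the quantization: larger quantized variants give even higher accuracy, at the cost of higher resource usage and slower inference. Explore all versions of the model and their file formats, such as GGML, GPTQ, and HF, to understand the hardware requirements for local inference.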
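The RAM question above can be approached with a back-of-the-envelope estimate: weight memory is parameters times bits per weight divided by 8, and the KV cache grows linearly with context length. The sketch below assumes nominal parameter counts (7e9, 13e9, 70e9), an fp16 KV cache, and the layer and head counts from the published Llama 2 configurations; it ignores activations and runtime overhead, so treat the result as a lower bound rather than an exact requirement.

```python
from dataclasses import dataclass

GIB = 1024 ** 3

@dataclass(frozen=True)
class ModelShape:
    params: float    # nominal parameter count (assumption: rounded, not exact)
    n_layers: int
    n_kv_heads: int  # key/value heads; the 70B model uses grouped-query attention
    head_dim: int

# Layer/head figures from the published Llama 2 configurations.
LLAMA2 = {
    "7B": ModelShape(7e9, 32, 32, 128),
    "13B": ModelShape(13e9, 40, 40, 128),
    "70B": ModelShape(70e9, 80, 8, 128),
}

def weights_bytes(shape: ModelShape, bits_per_weight: float) -> float:
    """Memory for the weights at a given quantization width."""
    return shape.params * bits_per_weight / 8

def kv_cache_bytes(shape: ModelShape, context: int, bytes_per_value: int = 2) -> int:
    """Keys and values (factor 2) for every layer, KV head, and position; fp16 by default."""
    return 2 * shape.n_layers * shape.n_kv_heads * shape.head_dim * context * bytes_per_value

def estimate_gib(size: str, bits_per_weight: float, context: int) -> float:
    """Weights plus KV cache, in GiB; excludes activations and runtime buffers."""
    shape = LLAMA2[size]
    total = weights_bytes(shape, bits_per_weight) + kv_cache_bytes(shape, context)
    return total / GIB

if __name__ == "__main__":
    for bits in (4, 5, 8):
        print(f"70B, {bits}-bit weights, 32K context: "
              f"~{estimate_gib('70B', bits, 32_768):.1f} GiB")
```

On these assumptions, a 4-bit 70B model with a full 32K fp16 KV cache needs somewhat over 42 GiB before any runtime overhead. GGML-style formats also store per-block scale factors, so their effective bits per weight run slightly above the nominal width.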

LLaMA-2-7B-32K is an open-source long-context language model developed by Together, fine-tuned from Meta's original Llama-2 7B model. Together built it as a 32K-context model using Position Interpolation together with Together AI's data recipe and system optimizations, including FlashAttention. Llama-2-7B-32K-Instruct is an open-source long-context chat model fine-tuned from Llama-2-7B-32K on high-quality instruction and chat data. Together introduced the Instruct model, fine-tuned using the Together API, in a blog post, shares the complete recipe in a repository, and encourages developers to try out the Together API and give feedback. According to Together, LLaMA-2-7B-32K extended the context length of Llama-2 from 4K to 32K for the first time, giving developers the ability to use open-source AI for long-context tasks.
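Position Interpolation, which Together credits for the 32K context, linearly rescales position indices so that a longer window maps into the range the base model saw during pretraining; going from 4K to 32K gives a factor of 4096 / 32768 = 1/8. The sketch below shows the idea on rotary position embeddings (RoPE), which Llama 2 uses. It assumes the common RoPE base of 10000 and is an illustration of the technique, not Together's training code.

```python
import math
from typing import List

ORIGINAL_CTX = 4096   # Llama-2 pretraining context length
EXTENDED_CTX = 32768  # context length of LLaMA-2-7B-32K
SCALE = ORIGINAL_CTX / EXTENDED_CTX  # 0.125: squeeze 32K positions into the 4K range

def rope_angles(position: float, head_dim: int, base: float = 10000.0,
                scale: float = 1.0) -> List[float]:
    """Rotary-embedding angles for one position; theta_i = base ** (-2i / head_dim).

    Position Interpolation multiplies the position index by `scale` before the
    rotation, so positions stay inside the range seen during pretraining
    instead of extrapolating beyond it.
    """
    scaled_pos = position * scale
    return [scaled_pos * base ** (-2 * i / head_dim) for i in range(head_dim // 2)]

if __name__ == "__main__":
    # Position 32760 in the extended window reuses the angles of position 4095.
    interpolated = rope_angles(32760, head_dim=128, scale=SCALE)
    pretrained = rope_angles(4095, head_dim=128)
    print(all(math.isclose(a, b) for a, b in zip(interpolated, pretrained)))
    # Even the last extended position maps below the original limit.
    print((EXTENDED_CTX - 1) * SCALE < ORIGINAL_CTX)
```

Because interpolated angles stay within the range the base model was trained on, the model only has to adapt to finer-grained positions rather than to unseen ones.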

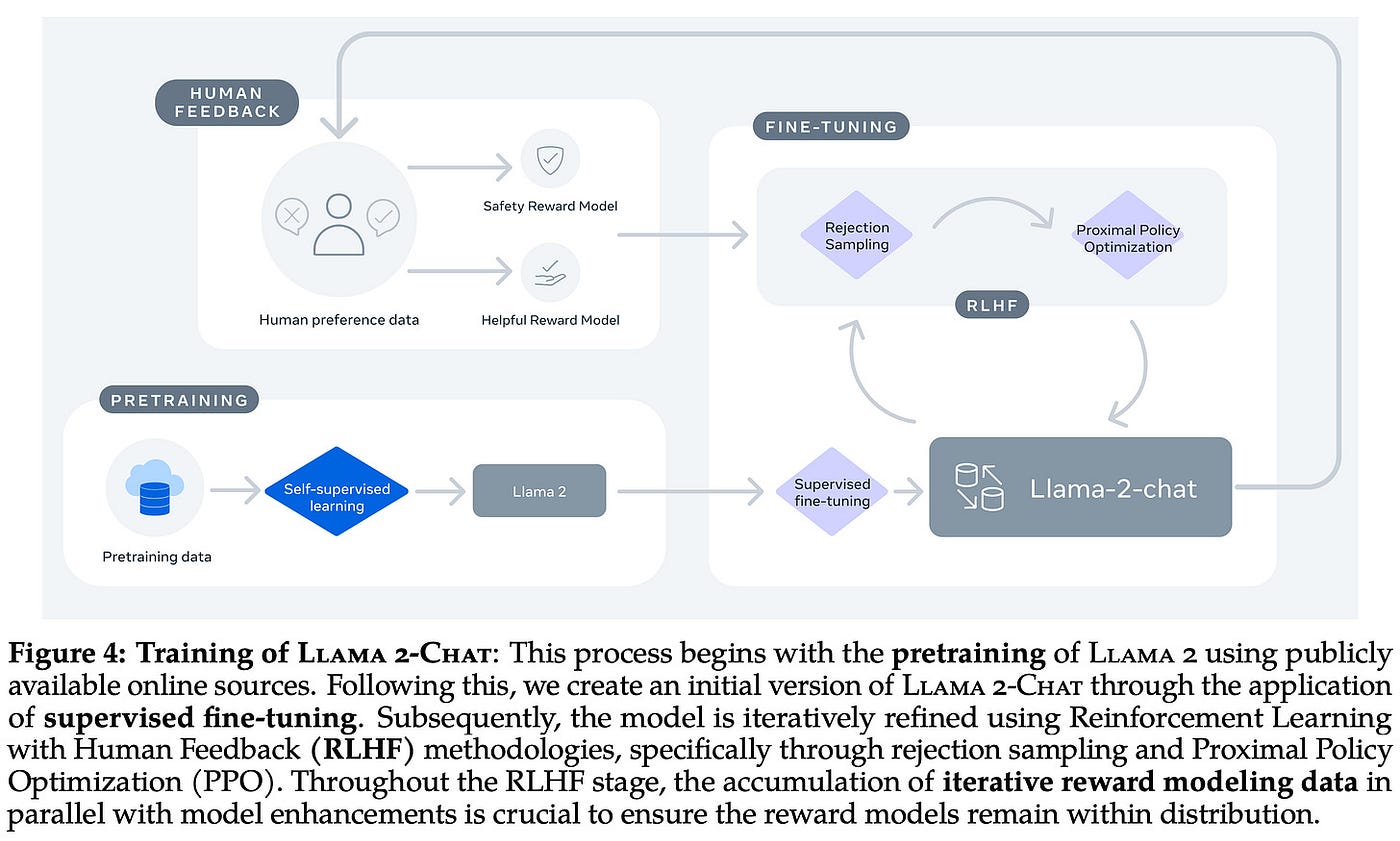

What are the hardware SKU requirements for fine-tuning pre-trained Llama models? Fine-tuning requirements also vary based on the amount of data, the time available to complete fine-tuning, and cost constraints. In this part, we will learn about all the steps required to fine-tune the Llama 2 model with 7 billion parameters on a T4 GPU. There are different methods for running LLaMA models locally.

Key concepts in LLM fine-tuning include supervised fine-tuning (SFT), reinforcement learning from human feedback (RLHF), and the prompt template.

To use Llama 2 on Azure, select the Llama 2 model appropriate for your application from the model catalog and deploy it using the PayGo (pay-as-you-go) option. You can also fine-tune your model using MaaS (Models as a Service) from Azure AI Studio.
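Fitting a 7B model onto a T4 (16 GB of GPU memory) is usually only practical with parameter-efficient methods such as LoRA, which the `peft` imports used in this article point to. The sketch below compares rough memory figures. Assumptions not stated in the article: LoRA adapters on the `q_proj` and `v_proj` matrices of every layer with rank 8, the published Llama-2-7B shape (32 layers, hidden size 4096), about 16 bytes per parameter for full fine-tuning with mixed-precision Adam, and a 4-bit frozen base model as in QLoRA; activations are ignored.

```python
N_LAYERS = 32    # Llama-2-7B transformer blocks
HIDDEN = 4096    # Llama-2-7B hidden size (q_proj and v_proj are 4096 x 4096)
PARAMS_7B = 7e9  # nominal parameter count (assumption: rounded)
GB = 1e9         # decimal gigabytes, as GPU memory (e.g. "16 GB T4") is usually quoted

def lora_trainable_params(rank: int, targets_per_layer: int = 2,
                          d_in: int = HIDDEN, d_out: int = HIDDEN,
                          n_layers: int = N_LAYERS) -> int:
    """LoRA learns B (d_out x r) and A (r x d_in) instead of updating the full matrix."""
    per_matrix = rank * (d_in + d_out)
    return per_matrix * targets_per_layer * n_layers

def full_finetune_gb(params: float, bytes_per_param: int = 16) -> float:
    """Mixed-precision Adam: fp16 weights (2) + fp16 grads (2)
    + fp32 master weights (4) + two fp32 Adam moments (8) = 16 bytes/param."""
    return params * bytes_per_param / GB

def frozen_base_gb(params: float, bits_per_weight: float) -> float:
    """Frozen, quantized base weights in a QLoRA-style setup."""
    return params * bits_per_weight / 8 / GB

if __name__ == "__main__":
    r8 = lora_trainable_params(rank=8)
    print(f"LoRA r=8 on q_proj/v_proj: {r8:,} trainable params "
          f"({100 * r8 / PARAMS_7B:.3f}% of 7B)")
    print(f"Full fine-tuning with Adam: ~{full_finetune_gb(PARAMS_7B):.0f} GB")
    print(f"4-bit frozen base weights: ~{frozen_base_gb(PARAMS_7B, 4):.1f} GB")
```

Full fine-tuning at roughly 112 GB is far beyond a T4, while a 4-bit base (about 3.5 GB) plus a few million adapter parameters leaves room for activations. The 4,194,304 adapter count is what `peft`'s `print_trainable_parameters()` typically reports for this configuration.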
